Health literacy measurement: a comparison of four widely used health literacy instruments (TOFHLA, NVS, HLS-EU and HLQ) and implications for practice

Rebecca L. Jessup A B * , Alison Beauchamp C , Richard H. Osborne D , Melanie Hawkins D , Rachelle Buchbinder E


Abstract

Health literacy has evolved from a focus on individual skills to an interactive process influenced by relationships and the health system. Various instruments measure health literacy, developed from different conceptions and often for different measurement purposes. The aim of this study was to compare the properties of four widely used health literacy instruments: Test of Functional Health Literacy in Adults (TOFHLA), Newest Vital Sign (NVS), European Health Literacy Survey (HLS-EU-Q47), and Health Literacy Questionnaire (HLQ).

This was a within-subject study comparing instrument performance. Composite reliability and Cronbach’s alpha were used to measure internal consistency, floor and ceiling effects were used to determine discriminative ability across low-to-high score ranges, and Spearman’s rank-order correlation coefficient was used to assess the relationship between instruments, particularly scales aiming to measure similar constructs.

Fifty-nine patients consented, with 43 completing all four instruments. Internal consistency was high for all scales (composite reliability range 0.76–0.95). Floor and ceiling effects were observed, with TOFHLA demonstrating the largest ceiling effect (>62%) and NVS the only floor effect (18%). Only moderate correlations were found between TOFHLA and NVS (r = 0.60) and between HLS-EU-Q47 and HLQ scales (r ~0.6).

Our study found low to moderate correlations between the instruments, indicating they measure different constructs of health literacy. Clinicians and researchers should consider the intended measurement purpose and constructs when choosing an instrument. If the purpose of measurement is to understand reading, comprehension, and numeracy skills in individuals and populations, then performance-based functional health literacy instruments such as the TOFHLA and NVS will be suitable. However, if the purpose is to generate insights into broader elements of health literacy, including social supports and relationships with health providers, then the HLS-EU and HLQ may be useful. The findings highlight the need for careful instrument selection to ensure meaningful and appropriate data interpretation. As improving population health literacy is a national priority in many countries, it is important that clinicians and researchers understand the measurement differences between instruments so that they can choose the right instrument for their measurement purpose.

Keywords: comparison, health literacy, instruments, measurement, properties, psychometric, tools.

Introduction

Over the past three decades, the conceptualisation of health literacy has evolved from a focus on an individual’s attributes and skills toward an interactive process, influenced and mediated by supportive relationships at home and with health providers and the health system at large. Actions to develop the health literacy of individuals have subsequently broadened from initiatives that support reading, comprehension, and numeracy skill development to health system improvements that support people to access, understand, appraise, remember, and use health information and services to promote and manage their health (Nutbeam 2000, 2021).

There are now several widely used instruments to measure health literacy, but each instrument has been developed from different conceptions of health literacy (Jordan et al. 2011). These conceptual differences relate to the measurement purpose of each instrument (Hawkins et al. 2021). Subsequently, the differences between what each instrument measures can present challenges for researchers and clinicians when deciding which instrument will generate data that are appropriate, meaningful, and useful for their research or clinical purpose.

Instruments that measure health literacy fall largely into one of two categories: first-generation performance-based measures that assess functional health literacy, and more recent self-report measures that aim to capture health literacy as a multidimensional construct (i.e. include items beyond reading and writing ability) while still including measures of functional health literacy in self-report format. Performance-based measures of health literacy determine how a person objectively performs on a test (in this case, of numeracy and/or reading comprehension), while self-report instruments ask an individual to respond about their own perception of their abilities, behaviours, attitudes, and experiences. Two of the most widely used performance-based measures of health literacy are the Test of Functional Health Literacy in Adults (TOFHLA) (Parker et al. 1995) and the Newest Vital Sign (NVS) (Weiss et al. 2005), while two of the most widely used multidimensional self-report measures are the European Health Literacy Survey (HLS-EU-Q47) (Sørensen et al. 2013) and the Health Literacy Questionnaire (HLQ) (Osborne et al. 2013).

The newer multidimensional self-report instruments measure a broader concept of health literacy than earlier instruments, but the data from these different types of instruments are often interpreted in similar ways and used for similar decision-making purposes (Jordan et al. 2011; Haun et al. 2012). Data interpretations are often directed at determining a ‘level’ of health literacy (e.g. high or low, adequate or inadequate), with a focus on using data to identify what are seen as health literacy deficits in individuals or groups and populations (Nutbeam 2009; Nutbeam and Muscat 2020), and inform (usually clinical) practice (Al Sayah et al. 2013; Matsuoka et al. 2016). However, there is a growing global momentum to focus on the health literacy assets (i.e. strengths) of individuals and communities and to address community-identified health literacy needs (Nutbeam 2009; Dias et al. 2021; Hawkins et al. 2021; Osborne et al. 2022a). This shift in the use of health literacy data necessitates an understanding of the different constructs captured by the different instruments to ensure that data-derived inferences are appropriate, meaningful, and useful for the intended purpose. Similarly, it must be determined if validity evidence can verify the use of a health literacy instrument for a particular measurement purpose and context. For example, to understand the full range of health literacy competencies in a given population, there must be evidence that an instrument can differentiate between people with different competencies. If the purpose of measurement is to measure change in an individual’s health literacy over time, then there must be evidence that an instrument demonstrates adequate breadth, reliability, and sensitivity to be able to measure this change.

To date, there are no studies that have compared the performance of these health literacy instruments in a head-to-head study. The aim of this study was to compare the TOFHLA, NVS, HLS-EU-Q47, and HLQ using internal consistency and floor and ceiling effects, and to explore the correlation between measures.

Materials and methods

This is a within-subject study comparing related health literacy measures within the same patient. Patients attending Northern Health, a large metropolitan hospital in Melbourne, Australia, for day hospital procedures (including infusions, transfusions, venesection, and trial of void) were invited to participate (including people from migrant and refugee backgrounds). The hospital is located in an area of high socioeconomic disadvantage with a higher number of migrants and refugees, and higher rates of unemployment, than State averages (Jessup et al. 2022; Jessup et al. 2023).

All consecutive eligible patients were approached on 2 days per week for the study duration. Instruments were administered at a time that was convenient to their care and the needs of the clinical staff. Exclusion criteria were age <18 years, cognitive impairment, and inability to converse in English. Participants were first approached by a nurse on the ward, who advised the lead author whether the patient was capable, comfortable, and willing to participate. The lead author then approached the patient, explained the study, and gained written informed consent. Recruitment was consecutive until the minimum sample size was reached. The instruments were administered in random order to prevent administration order bias. Participation was voluntary and participants were informed that they could choose to end their participation if they were fatigued or distressed. In cases where participants were unable to self-administer an instrument but were still interested in participating, the lead author verbally administered the HLS-EU-Q47 and HLQ only (as these instruments could be administered in interview form).

Instruments

In 2000, Nutbeam proposed a three-tiered model to describe individual health literacy skills:

Functional health literacy: the basic skills required to read health information;

Interactive/communicative health literacy: the advanced cognitive, literacy, and social skills that allow an individual to interpret, derive meaning of, and apply health information;

Critical health literacy: the advanced skills, which, together with social skills, can be used to analyse information related to health and allow individuals to exert greater control over their circumstances.

This model suggests that health literacy skills are on a continuum. Development of an individual’s health literacy may need to also consider additional concepts including (from the practice-based World Health Organization (WHO) model for health literacy development (World Health Organization 2022)):

Health literacy responsiveness: the extent to which health workers, services, systems, organisations, and policy makers recognise and accommodate diverse health literacy strengths and needs.

Community health literacy: centred around family, peer, and other community conversations, and reliant on developing community assets related to health literacy.

Table 1 displays an overview of the four health literacy instruments chosen for this study, including instrument purpose (as described by developers), mode of delivery, and time to complete. In addition, each has been mapped to both the Nutbeam (2000) and WHO (Osborne et al. 2022b) models of health literacy to provide an overview of the type of ‘health literacy’ information that is gathered by each instrument. Each individual instrument is outlined in greater detail below.

| Nutbeam model of health literacy skills (2000) | WHO model for health literacy development (2021) | Format | |||||

|---|---|---|---|---|---|---|---|---|

Functional | Interactive | Critical | Individual | Responsiveness | Community | |||

TOFHLA measurement purpose: To assess functional health literacy (Parker et al. 1995) Administration mode: Timed test Administration time: 22 min Scoring: Summary score out of 100 combining reading comprehension and numeracy scales. Adequate health literacy if the TOFHLA score is 75–100, marginal health literacy if it is 60–74, and inadequate health literacy if the score is 0–59. | ||||||||

Reading comprehension | ✓ | ✓ | Cloze procedure | |||||

Numeracy | ✓ | ✓ | Correct answers according to developer instructions | |||||

NVS measurement purpose: To rapidly screen for limited literacy (Weiss et al. 2005) Administration mode: Timed test Administration time: 3–6 min Scoring: Summary score out of 6, with 0–1 high likelihood of limited literacy, 2–3 possibility of limited health literacy, and 4–6 adequate health literacy. | ||||||||

Literacy (reading, interpretation, numeracy) | ✓ | ✓ | Correct answers using score card | |||||

HLS-EU-Q47 measurement purpose: To measure the health literacy (3 domains) of populations (Sørensen et al. 2013) Administration mode: Interview or self-administered Administration time: 20–30 min Scoring: Summary score, with 0–25 indicating inadequate health literacy, >25–33 indicating problematic health literacy, >33–42 indicating sufficient health literacy and >42–50 indicating excellent health literacy. | ||||||||

1. Healthcare | ✓ | ✓ | Five-point response options: 1 = very difficult 2 = difficult 3 = easy 4 = very easy 5 = (don’t know – interviewer only) | |||||

2. Disease prevention | ✓ | ✓ | ||||||

3. Health promotion | ✓ | ✓ | ✓ | |||||

HLQ measurement purpose: To measure profiles of health literacy strengths and needs (across nine domains) for population health surveys and to inform the development and evaluation of health literacy interventions (Osborne et al. 2013) Administration mode: Interview or self-administered Administration time: 7–30 min Scoring: No summary score or classification of health literacy level. HLQ provides nine individual scores based on an average of the items within each of the nine scales. | ||||||||

1. Feeling understood and supported by healthcare providers | ✓ | ✓ | ✓ | Four-point response options: 1 = strongly disagree 2 = disagree 3 = agree 4 = strongly agree | ||||

2. Having sufficient information to manage my health | ✓ | ✓ | ||||||

3. Actively managing my health | ✓ | ✓ | ✓ | |||||

4. Social support for health | ✓ | ✓ | ✓ | ✓ | ||||

5. Appraisal of health information | ✓ | ✓ | ✓ | |||||

6. Ability to actively engage with healthcare providers | ✓ | ✓ | ✓ | Five-point response options: 1 = cannot do or always difficult 2 = usually difficult 3 = sometimes difficult 4 = usually easy 5 = always easy | ||||

7. Navigating the healthcare system | ✓ | ✓ | ||||||

8. Ability to find good health information | ✓ | ✓ | ✓ | ✓ | ||||

9. Understand health information well enough to know what to do | ✓ | ✓ | ||||||

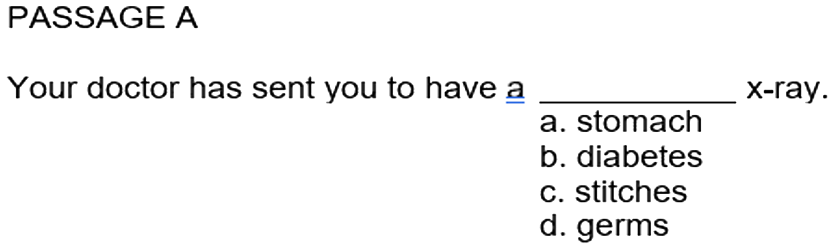

The TOFHLA was developed to provide physicians with an objective, performance-based measure of functional health literacy with the goal of identifying patients’ ability to read health-related materials (Parker et al. 1995). It consists of two scales: a 50-item literacy test assessing reading comprehension (TOFHLA Reading comprehension), and a 17-item numeracy test (TOFHLA Numeracy). The TOFHLA Reading comprehension scale uses a modified Cloze procedure: every 5th to 7th word in a passage is omitted and four multiple-choice options are provided, one of which is correct and three of which are similar but grammatically or contextually incorrect. An example of one of the TOFHLA Reading comprehension scale questions is provided in Fig. 1.

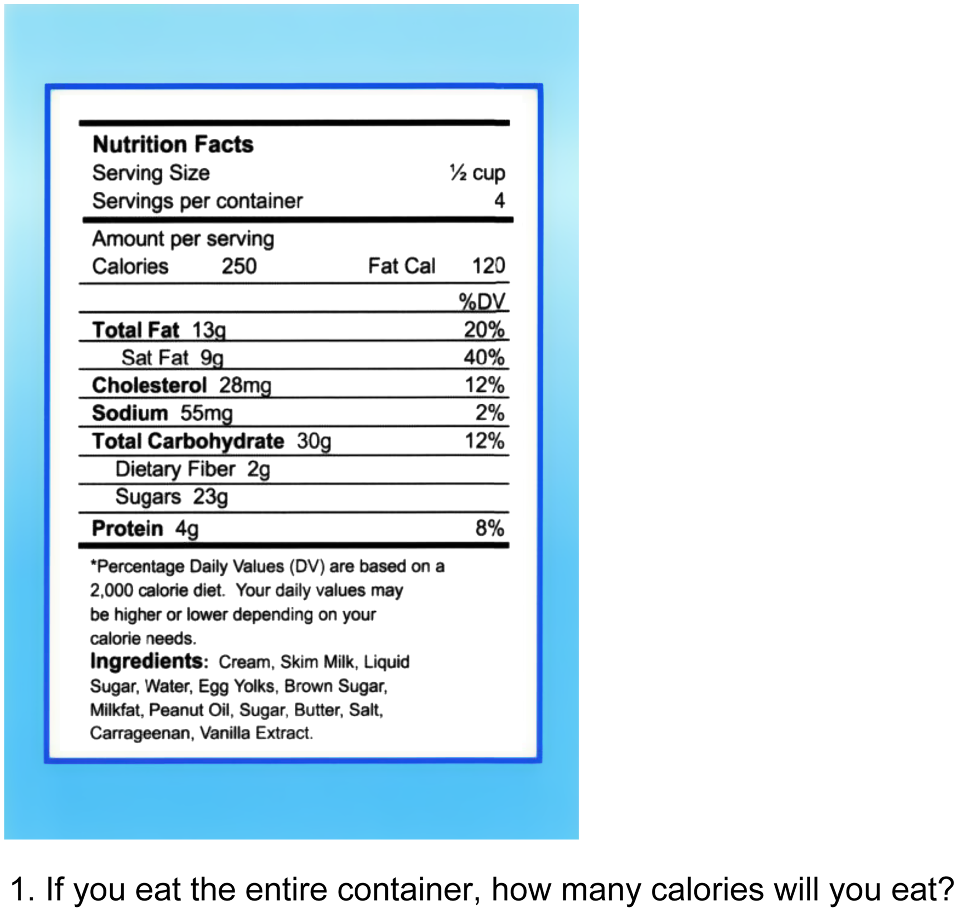

The 17-item TOFHLA Numeracy scale uses hospital forms and prescription labels to test comprehension. The Australian version of the TOFHLA was used in this study (Barber et al. 2009). The TOFHLA Numeracy scale is weighted to create a score from 0 to 50. This is then added to the TOFHLA Reading comprehension scale score (also scored 0 to 50) yielding a score from 0 to 100. An example of a question in the TOFHLA Numeracy scale is provided in Fig. 2.
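
As an illustration only, the TOFHLA scoring described above could be sketched in Python as follows. The function names are hypothetical, and the linear rescaling of the 17-item numeracy score to a 0 to 50 range is an assumption made for this sketch; the instrument's own weighting table may differ. The classification uses the cut-offs listed in Table 1.

```python
def tofhla_total(reading_raw: int, numeracy_raw: int) -> float:
    """Combine the two TOFHLA scales into a 0-100 total score.

    Assumption: the 17-item numeracy score is rescaled linearly to 0-50.
    The instrument's published weighting may differ from this.
    """
    if not 0 <= reading_raw <= 50:
        raise ValueError("reading score must be between 0 and 50")
    if not 0 <= numeracy_raw <= 17:
        raise ValueError("numeracy score must be between 0 and 17")
    numeracy_weighted = numeracy_raw * 50 / 17
    return reading_raw + numeracy_weighted


def tofhla_category(total: float) -> str:
    """Classify a 0-100 total using the cut-offs listed in Table 1."""
    if total >= 75:
        return "adequate"
    if total >= 60:
        return "marginal"
    return "inadequate"
```

For example, `tofhla_category(tofhla_total(40, 12))` classifies a respondent with 40 correct reading items and 12 correct numeracy items.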

The NVS was developed by a panel of experts in health literacy (Weiss et al. 2005). The test was designed to provide a quick screen for limited functional health literacy, with the goal of understanding which patients have limited literacy in the primary healthcare setting. The NVS consists of a nutrition label from an ice cream container accompanied by six questions. There are four numeracy questions that ask the participant to either interpret the label or calculate equations using the information on the label. Two further questions can be administered if one of the first four questions is answered incorrectly. These two questions assess the reader’s ability to comprehend the product contents written on the label. The label and an example of one of the questions are outlined in Fig. 3.

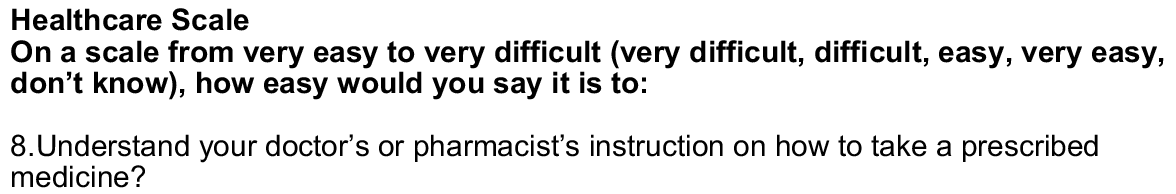

The HLS-EU-Q47 was developed by the European Health Literacy Consortium following a review of the literature and development of a theoretical framework (Sørensen et al. 2013). The goal of the instrument is to comprehensively measure health literacy in populations. Items address aspects of functional, interactive, and critical health literacy in one scale across three domains. The instrument can be self-completed or administered by interview and has 47 items across the domains that cover elements of accessing, understanding, appraising, and applying information. An overall score is calculated with cut-off thresholds for inadequate, problematic, sufficient, or excellent health literacy. Data about the health literacy of individuals are collated and interpreted as the ability of populations to access, understand, appraise, and apply health information across the three domains: Healthcare (functional health literacy); Disease prevention (some items include aspects of interactive health literacy); and Health promotion (some items include aspects of critical health literacy). Items in the Healthcare and Disease prevention scales relate to settings in which individual health literacy is measured. Some items in the Health promotion scale relate to a community health literacy setting in terms of what individuals know about health education and promotion in their community. An overall health literacy score and separate scale scores are calculated for each participant. Scores are standardised on a scale ranging from 0 to 50. An example of a question from the Healthcare scale is provided in Fig. 4.
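
A minimal sketch of how a 0 to 50 standardised score might be derived is given below. It assumes the linear transformation index = (mean − 1) × 50/3 applied to the mean of the 1 to 4 item responses, and it treats "don't know" responses (coded 5) as missing; both are assumptions for illustration rather than a description of the scoring used in this study. The function names are hypothetical, and the categories follow the thresholds in Table 1.

```python
from statistics import mean


def hls_eu_index(item_scores):
    """Rescale the mean of 1-4 item responses to a 0-50 index.

    Assumptions: index = (mean - 1) * 50 / 3, and responses coded 5
    ("don't know") are treated as missing.
    """
    valid = [s for s in item_scores if s in (1, 2, 3, 4)]
    if not valid:
        raise ValueError("no valid item responses")
    return (mean(valid) - 1) * 50 / 3


def hls_eu_category(index):
    """Classify a 0-50 index using the thresholds listed in Table 1."""
    if index > 42:
        return "excellent"
    if index > 33:
        return "sufficient"
    if index > 25:
        return "problematic"
    return "inadequate"
```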

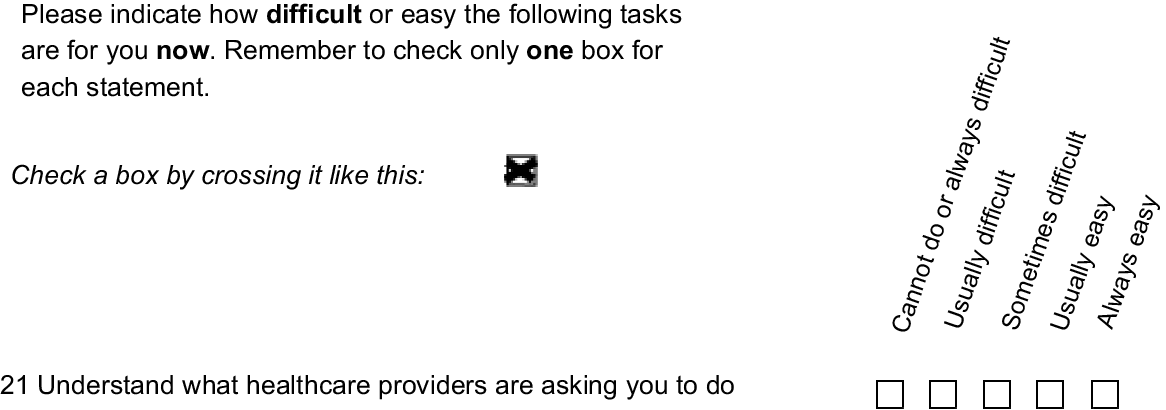

The HLQ was developed using a grounded validity-driven approach to capture the multidimensional construct of health literacy from both patient and clinician perspectives (Osborne et al. 2013), with the goal of creating a measure that reflects the multidimensional nature of health literacy. The grounded approach consisted of interviews and concept mapping workshops with community members, health practitioners, hospital managers, and policy makers. The nine HLQ scales are independent questionnaires and result in nine scale scores – a single total score is not generated (Elsworth et al. 2016). There are 44 questions across the following nine scales: 1. Feeling understood and supported by health care providers; 2. Having sufficient information to manage my health; 3. Actively managing my health; 4. Social support for health; 5. Appraisal of health information; 6. Ability to actively engage with healthcare providers; 7. Navigating the healthcare system; 8. Ability to find good health information; and 9. Understand health information well enough to know what to do. Item scores within each scale are summed and divided by the number of items in the scale with all items having equal weighting. The HLQ scale scores do not have cut-off points: a lower scale score indicates potential need in relation to the scale construct while a higher score indicates a potential strength (Beauchamp et al. 2015). Data are used to identify profiles of health literacy strengths, needs, and preferences of individuals, communities, and populations. While items in all nine HLQ scales relate to individual health literacy, some scales have one or more items that relate to health literacy responsiveness and community health literacy. Fig. 5 provides an example of a question from scale 9. Understand health information well enough to know what to do.
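
The HLQ scoring rule described above (equally weighted item scores summed and divided by the number of items, with no total score) can be sketched as follows. The function names and the dictionary layout are hypothetical choices for this illustration.

```python
def hlq_scale_score(item_responses):
    """Mean of the equally weighted item responses for one HLQ scale."""
    if not item_responses:
        raise ValueError("a scale needs at least one item response")
    return sum(item_responses) / len(item_responses)


def hlq_profile(responses_by_scale):
    """Return one score per scale; no total score is computed.

    `responses_by_scale` maps a scale label to that scale's item responses.
    """
    return {scale: hlq_scale_score(items)
            for scale, items in responses_by_scale.items()}
```

Returning a dictionary of nine separate scores, rather than a single number, mirrors the profile-based interpretation of the HLQ.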

Data analysis

For each scale within each instrument, composite reliability (CR) (Raykov 2008) and Cronbach’s alpha (Nunnally 1978) were calculated to estimate internal consistency reliability. Where an instrument consisted of multiple scales or domains, internal consistency was measured and compared separately for each scale or domain, to gain a better understanding of which scale items are interrelated. We used both CR and alpha because Cronbach’s alpha is known to underestimate reliability when the item loadings in a single-factor model are not equal, and CR additionally allows calculation of 95% confidence intervals (CIs) (Hair et al. 2013). CIs were calculated using a bootstrapping method (Raykov and Marcoulides 2011, p. 168). It is not possible to compute CR for dichotomous indicators using Raykov’s approach (see Raykov 2008, p. 176), so we were unable to calculate CR for the TOFHLA Reading comprehension scale.
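
For readers unfamiliar with the two reliability estimates, the following Python sketch shows the standard formulas. It is not the SPSS or MPlus procedure used in this study. Cronbach's alpha is computed directly from item responses. Composite reliability is computed from the loadings and error variances of an already fitted single-factor model, assuming a factor variance of 1 and uncorrelated errors; fitting that model and bootstrapping CIs are not shown. Function names are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha from complete item responses.

    `rows` holds one list of item scores per respondent (no missing values).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item, and of the summed scale score.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def composite_reliability(loadings, error_variances):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes a single-factor model with factor variance fixed at 1 and
    uncorrelated errors; the inputs come from a model fitted elsewhere.
    """
    squared_sum = sum(loadings) ** 2
    return squared_sum / (squared_sum + sum(error_variances))
```

Because composite reliability uses each item's own loading, it does not require the equal-loading assumption that underlies alpha.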

Floor and ceiling effects were assessed to determine the ability of scales to detect variance between respondents. Floor and ceiling effects are conventionally defined as the percentage of participants scoring the minimum (floor) and maximum (ceiling) possible scores (Ho and Carol 2015). In this study, we used the proportion of respondents with scores in the lower and upper 15% of the possible score range for each scale, and defined a floor or ceiling effect as present when at least 15% of the sample fell within that range (Driban et al. 2015). We considered floor and ceiling effects significant if they affected ≥15% of respondents, moderate if 10% to <15%, minor if 5% to <10%, and negligible if <5% (Gulledge et al. 2019).
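
The floor and ceiling calculation can be sketched as below. This is an illustrative reading of the definition above, not the Stata code used in the study: the function names are hypothetical, and treating scores exactly on a band boundary as inside the band is an assumption.

```python
def floor_ceiling(scores, min_score, max_score, band=0.15):
    """Return (floor %, ceiling %) of respondents in the lowest and
    highest `band` fraction of the possible score range.

    Assumption: scores exactly on a band boundary count as inside the band.
    """
    if not scores:
        raise ValueError("no scores supplied")
    span = max_score - min_score
    low_cut = min_score + band * span
    high_cut = max_score - band * span
    n = len(scores)
    floor_pct = 100 * sum(s <= low_cut for s in scores) / n
    ceiling_pct = 100 * sum(s >= high_cut for s in scores) / n
    return floor_pct, ceiling_pct


def effect_label(pct):
    """Label an effect using the thresholds stated in the text."""
    if pct >= 15:
        return "significant"
    if pct >= 10:
        return "moderate"
    if pct >= 5:
        return "minor"
    return "negligible"
```

For a 0 to 6 scale such as the NVS, the lowest 15% of the range contains only a score of 0 and the highest 15% only a score of 6.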

Spearman’s rank order correlation coefficient (r) was used to explore associations between pairs of scale scores (within and between instruments). An r of <0.6 was considered to be a weak to moderate association, indicating the scales may measure different constructs. An r of 0.6–0.8 was considered to be a strong association, indicating scales likely measure similar constructs; and an r of >0.8 was considered a very strong association, indicating two scales are principally parallel measures of the same construct (Cohen 2013).
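
A self-contained sketch of the rank-order correlation and the interpretation bands above is shown below. It uses only the Python standard library rather than the statistical packages used in this study, assigns average ranks to ties, and applies the bands to the absolute value of r, which is an assumption of this sketch. Function names are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_r(x, y):
    """Spearman's r: the Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (sx * sy)


def interpret(r):
    """Map r to the bands used in the text (applied to |r| as an assumption)."""
    size = abs(r)
    if size > 0.8:
        return "very strong"
    if size >= 0.6:
        return "strong"
    return "weak to moderate"
```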

We expected that measures of the same construct would be moderately correlated (Spearman’s rank order correlation coefficient r > 0.6). Specifically, we anticipated that measures with content clearly related to functional health literacy (i.e. TOFHLA Reading comprehension and Numeracy scales, the NVS, the HLS-EU-Q47 Healthcare scale and the HLQ scale 9. Understand health information well enough to know what to do) would be correlated at r > 0.6. This moderate correlation was chosen because the value is widely accepted as indicating that two scales are broadly measuring the same construct (Cohen 2013).

Using Cohen’s guideline of 0.2 being the difference between scales that measure similar constructs (0.6–0.8) and those that are strongly related (0.8–0.9), we calculated that the required sample size (n) would be ~38 participants to provide sufficient power (0.80) to detect a correlation within 0.2 between the scales, assuming a two-tailed test with a significance level (α) of 0.05 and a population variance (σ²) of 0.2 when r = 0.8 (Bonett and Wright 2000). We therefore aimed to recruit at least 44 participants to complete all four instruments (taking into consideration potential for missing data) for comparison.

The statistical analysis software IBM SPSS 22 was used to calculate Cronbach’s alpha and ANOVA, and MPlus (Muthén and Muthén 1998) was used to calculate CR. All other analyses were conducted using StataSE Version 13.

Results

Fifty-nine of 100 consecutive patients approached consented to participate. Three participants were excluded from the final analysis due to missing data. The mean age of participants was 57 years (s.d. 15, range 32 to 86) and 33 (58%) were female (Table 2). Non-participants were slightly younger, with a mean age of 57 years (s.d. 18, range 18 to 91), and slightly more (68%) were female. No further information was available for non-participants.

| Demographic | n (%) |
|---|---|
| Mean age in years (s.d.) | 56 (15) |
| Gender | |
| Female | 34 |
| Male | 25 |
| Highest level of education | |
| Did not complete high school | 28 (51%) |
| Completed high school | 15 (27%) |
| Trade certificate/Technical and Further Education (TAFE) qualification | 6 (11%) |
| Diploma or bachelor degree | 7 (13%) |
| Employment status | |
| Disability pension/workcover | 8 (14%) |
| Home duties | 7 (13%) |
| Employed part time | 5 (9%) |
| Employed full time | 10 (18%) |
| Retired | 22 (40%) |
| Other (unemployed, volunteer worker) | 4 (7%) |
| Household annual income | |
| A$00,00–$49,000 | 25 (45%) |
| A$49,999–$74,999 | 5 (8%) |
| A$75,000–$99,000 | 1 (2%) |
| Over A$100,000 | 3 (5%) |
| Rather not say | 20 (36%) |
| Country of birth | |
| Australia | 35 (64%) |
| Asia (Burma and India) | 2 (3%) |
| Britain | 3 (5%) |
| Africa (Sudan) | 1 (2%) |
| European (Italy, Macedonia, Malta, Greece, Croatia and Holland) | 12 (22%) |
| New Zealand | 2 (3%) |
| Pacific Islands (Samoa) | 1 (2%) |
| Current smoker | 17 (31%) |
| Three or more chronic conditions | 15 (25%) |

Forty-nine participants completed three or more instruments, with 43 participants completing all four. Seven participants completed two instruments. Respondent fatigue was the primary reason for non-completion, with time to complete all four instruments ranging from 41 minutes to 2 hours. In addition, among those completing only two instruments, five were unable to complete the NVS and TOFHLA due to visual impairments or illiteracy. Four participants partially completed three or more instruments and their data were excluded.

All scales demonstrated satisfactory to high CR (CR range 0.76–0.95) and internal consistency reliability (α ≥ 0.77) (Table 3). The HLS-EU-Q47 Health promotion scale had the highest CR (0.95 [CI 0.91 to 0.96], 16 items). The TOFHLA Numeracy scale had the lowest CR (0.76 [CI 0.59 to 0.83], 17 items). Cronbach’s alpha and CR were similar across the scales.

| Instrument | Scale | No. of scale items | Alpha A (CI) | Composite reliability (CI) | TOFHLA numeracy | TOFHLA reading | NVS | HLS-EU-Q47 HC | HLS-EU-Q47 DP | HLS-EU-Q47 HP | 1. HLQ HPS | 2. HLQ HSI | 3. HLQ AMH | 4. HLQ SS | 5. HLQ AA | 6. HLQ AE | 7. HLQ NHS | 8. HLQ FHI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| TOFHLA | Numeracy | 17 | 0.77 (0.67–0.86) | 0.76 (0.59–0.83) | | | | | | | | | | | | | | |
| | Reading comprehension | 50 | 0.94 (0.91–0.96) | NA B | 0.44 | | | | | | | | | | | | | |
| NVS | NVS | 6 | 0.80 (0.71–0.88) | 0.81 (0.71–0.86) | 0.60 | 0.60 | | | | | | | | | | | | |
| HLS-EU-Q47 | Healthcare | 16 | 0.92 (0.89–0.95) | 0.92 (0.87–0.95) | 0.04 | 0.23 | 0.07 | | | | | | | | | | | |
| | Disease Prevention | 15 | 0.91 (0.87–0.94) | 0.91 (0.85–0.94) | −0.03 | 0.18 | 0.03 | 0.78 | | | | | | | | | | |
| | Health Promotion | 16 | 0.94 (0.92–0.97) | 0.95 (0.91–0.96) | −0.05 | 0.13 | 0.01 | 0.57 | 0.73 | | | | | | | | | |
| HLQ | 1. Healthcare provider support | 4 | 0.84 (0.77–0.90) | 0.87 (0.79–0.90) | −0.12 | −0.04 | −0.07 | 0.29 | 0.32 | −0.01 | | | | | | | | |
| | 2. Having sufficient information | 4 | 0.88 (0.77–0.93) | 0.89 (0.80–0.93) | −0.07 | −0.06 | −0.10 | 0.58 | 0.54 | 0.44 | 0.62 | | | | | | | |
| | 3. Actively managing health | 5 | 0.84 (0.70–0.91) | 0.84 (0.73–0.91) | −0.15 | −0.18 | 0.02 | 0.23 | 0.27 | 0.09 | 0.58 | 0.68 | | | | | | |
| | 4. Social support for health | 5 | 0.80 (0.71–0.86) | 0.81 (0.74–0.85) | −0.07 | 0.01 | −0.10 | 0.32 | 0.43 | 0.25 | 0.74 | 0.67 | 0.61 | | | | | |
| | 5. Appraisal of health information | 5 | 0.85 (0.73–0.91) | 0.84 (0.73–0.90) | −0.13 | −0.18 | −0.31 | 0.17 | 0.30 | 0.12 | 0.52 | 0.58 | 0.64 | 0.56 | | | | |
| | 6. Active engagement with healthcare providers | 5 | 0.89 (0.81–0.94) | 0.89 (0.83–0.94) | −0.16 | −0.05 | −0.13 | 0.63 | 0.40 | 0.23 | 0.55 | 0.73 | 0.44 | 0.51 | 0.46 | | | |
| | 7. Navigating the healthcare system | 6 | 0.80 (0.66–0.88) | 0.80 (0.69–0.87) | −0.16 | −0.10 | −0.22 | 0.63 | 0.44 | 0.25 | 0.44 | 0.69 | 0.37 | 0.36 | 0.46 | 0.89 | | |
| | 8. Ability to find good health information | 5 | 0.77 (0.62–0.86) | 0.77 (0.67–0.85) | −0.02 | 0.14 | −0.01 | 0.42 | 0.34 | 0.24 | 0.20 | 0.48 | 0.19 | 0.23 | 0.50 | 0.63 | 0.74 | |
| | 9. Understand health information well enough to know what to do | 5 | 0.90 (0.87–0.93) | 0.91 (0.87–0.93) | −0.11 | 0.21 | 0.08 | 0.65 | 0.57 | 0.44 | 0.25 | 0.47 | 0.17 | 0.21 | 0.27 | 0.73 | 0.71 | 0.78 |

NB: Spearman correlation coefficients; <0.6 indicates the two scales measure different constructs, 0.6 to 0.8 indicates the scales measure similar constructs, 0.8 to 0.9 indicates the scales measure strongly related constructs, and >0.9 indicates the two scales probably measure the same construct (Cohen 2013). Spearman correlation coefficients in bold are significant at P < 0.05.

CI, Confidence Interval; TOFHLA, Test of Functional Health Literacy in Adults; NVS, Newest Vital Sign; HLS-EU-Q47, European Health Literacy Survey (47 Questions); HLQ, Health Literacy Questionnaire; HC, Healthcare; DP, Disease Prevention; HP, Health Promotion; HPS, Healthcare Provider Support; HSI, Having Sufficient Information; AMH, Actively Managing Health; SS, Social Support for Health; AA, Appraisal of Health Information; AE, Active Engagement with Healthcare Providers; NHS, Navigating the Healthcare System; FHI, Ability to Find Good Health Information.

Table 4 provides an overview of the number of participants who completed each scale, the mean and median scores for the scale, skewness and kurtosis, and floor and ceiling effects for all scales within each instrument (histograms are also available in the Supplementary material). More participants were able to complete the self-report instruments, HLS-EU-Q47 (n = 52) and the HLQ (n = 55). The short time required to administer the NVS (n = 51) resulted in more responses for this than for the TOFHLA (n = 47), which had the fewest participant completions. Interestingly, the mean score for the TOFHLA (86 overall combined mean score after weighting the numeracy scale) places the group’s health literacy in the adequate range for this instrument, while the mean score of just under three for the NVS places the group’s health literacy in the ‘possible limited literacy’ range. Group mean scores for the HLS-EU-Q47 indicate that participants were strongest in items on the Healthcare scale and weakest in the Health promotion scale. The HLQ mean scores indicate a range of strengths and challenges for participants, with participants strongest in 1. Feeling understood and supported by healthcare providers; 4. Social support for health; 6. Ability to actively engage with healthcare providers; and 9. Understand health information well enough to know what to do, while participants experienced challenges in scales 3. Actively managing my health and 5. Appraisal of health information.

The TOFHLA Reading comprehension scale exhibited negative skewness (−2.15) and high kurtosis (7.71), indicating a left skew where most scores are concentrated towards the higher end. Similarly, the TOFHLA Numeracy scale demonstrated negative skewness (−1.67) and high kurtosis (6.12). In contrast, the NVS demonstrated near-zero skewness (0.03) and moderate kurtosis (1.94), which points to a symmetrical distribution of scores. The HLQ scales all have slight negative skewness, except for 7. Navigating the healthcare system, which has a skewness of 0.00, indicating a symmetrical distribution. The data skewness is reflected in the ceiling effects, which ranged from 11% to 68%. Apart from the NVS, all scales demonstrated some ceiling effects with more than 15% of the sample located in the top 15% of the scale range. The TOFHLA Reading comprehension and Numeracy scales showed the largest ceiling effect, with 68% and 62% of individuals scoring in the top 15% of the scale range, respectively. The NVS was the only scale demonstrating floor effects (18%).

| Instrument | Subscale | Score range | N | Mean | s.d. | Skewness | Kurtosis | Floor^A (n) | Floor^A (%) | Ceiling^B (n) | Ceiling^B (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| TOFHLA | Reading comprehension | 0 to 50 | 47 | 43.56 | 7.78 | −2.15 | 7.71 | 0 | 0 | 29 | 62 |
| TOFHLA | Numeracy | 0 to 17 | 47 | 14.61 | 0.28 | −1.67 | 6.12 | 0 | 0 | 31 | 66 |
| NVS | | 0 to 6 | 51 | 2.86 | 1.95 | 0.03 | 1.94 | 9 | 18 | 6 | 11 |
| HLS-EU | Healthcare | 1 to 4 | 52 | 3.25 | 0.44 | −0.22 | 3.09 | 0 | 0 | 30 | 58 |
| HLS-EU | Disease prevention | 1 to 4 | 52 | 3.11 | 0.45 | 0.31 | 2.80 | 0 | 0 | 16 | 31 |
| HLS-EU | Health promotion | 1 to 4 | 52 | 2.79 | 0.66 | 0.36 | 2.44 | 0 | 0 | 15 | 29 |
| HLQ | 1. Healthcare provider support | 1 to 4 | 55 | 3.36 | 0.57 | −0.81 | 3.49 | 0 | 0 | 29 | 53 |
| HLQ | 2. Having sufficient information | 1 to 4 | 55 | 3.14 | 0.53 | −0.41 | 4.09 | 0 | 0 | 13 | 24 |
| HLQ | 3. Actively managing health | 1 to 4 | 55 | 3.06 | 0.61 | −0.62 | 4.27 | 0 | 0 | 16 | 29 |
| HLQ | 4. Social support for health | 1 to 4 | 55 | 3.37 | 0.50 | −0.33 | 2.52 | 0 | 0 | 28 | 51 |
| HLQ | 5. Active appraisal of health information | 1 to 5 | 55 | 3.00 | 0.61 | −0.35 | 3.61 | 0 | 0 | 0 | 0 |
| HLQ | 6. Active engagement with healthcare providers | 1 to 5 | 55 | 4.12 | 0.61 | −0.45 | 2.96 | 0 | 0 | 20 | 36 |
| HLQ | 7. Navigating the healthcare system | 1 to 5 | 55 | 3.96 | 0.58 | 0.00 | 2.89 | 0 | 0 | 15 | 27 |
| HLQ | 8. Ability to find good health information | 1 to 5 | 55 | 3.90 | 0.59 | −0.09 | 2.44 | 0 | 0 | 13 | 24 |
| HLQ | 9. Understand health information well enough to know what to do | 1 to 5 | 55 | 4.13 | 0.64 | −0.47 | 2.37 | 0 | 0 | 23 | 42 |
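The distribution statistics reported in Table 4 can be illustrated with a minimal sketch. It assumes that a floor or ceiling share is the proportion of respondents whose score falls in the bottom or top 15% of the scale range, as described above, and that kurtosis is reported on the scale where a normal distribution equals 3 (the values in Table 4 appear consistent with this, but that is an assumption). The function names and the example scores are hypothetical and are not study data.

```python
from statistics import fmean


def floor_ceiling_share(scores, lo, hi, band=0.15):
    """Return (floor_share, ceiling_share) for scores on a scale from lo to hi.

    A score counts towards the floor (ceiling) if it lies within the bottom
    (top) `band` fraction of the scale range. The 15% default is an assumption
    taken from the description in the text.
    """
    width = (hi - lo) * band
    n = len(scores)
    floor_share = sum(s <= lo + width for s in scores) / n
    ceiling_share = sum(s >= hi - width for s in scores) / n
    return floor_share, ceiling_share


def _central_moment(scores, order):
    """Population central moment of the given order."""
    mean = fmean(scores)
    return fmean([(s - mean) ** order for s in scores])


def skewness(scores):
    """Moment-based skewness; negative values indicate a left skew."""
    return _central_moment(scores, 3) / _central_moment(scores, 2) ** 1.5


def kurtosis(scores):
    """Moment-based (non-excess) kurtosis; a normal distribution gives 3."""
    return _central_moment(scores, 4) / _central_moment(scores, 2) ** 2


# Hypothetical scores on a 0 to 6 scale (illustration only, not study data).
example = [0, 0, 1, 2, 3, 3, 4, 5, 6, 6]
floor_share, ceiling_share = floor_ceiling_share(example, lo=0, hi=6)
print(f"floor {floor_share:.0%}, ceiling {ceiling_share:.0%}")
print(f"skewness {skewness(example):.2f}, kurtosis {kurtosis(example):.2f}")
```

Under the threshold used in this study, a scale would be flagged when either share exceeds 0.15. Statistical packages differ in whether they apply small-sample corrections or report excess kurtosis, so values from this sketch may not match software output exactly.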

We were interested in understanding the correlation between scales with content aiming to measure an individual’s functional health literacy. Specifically, we were interested in correlations between the TOFHLA, the NVS, the HLS-EU-Q47 Healthcare scale and the HLQ scales 5. Appraisal of health information, 8. Ability to find good health information, and 9. Understand health information well enough to know what to do. There was a moderate correlation between both scales of the TOFHLA (Numeracy and Reading comprehension) and the NVS (r = 0.60 for both). There were no substantial correlations between any of the scales that used performance-based tests to assess functional health literacy (TOFHLA and NVS) and the multidimensional self-report instruments (HLS-EU-Q47 and the HLQ).

There was a weak correlation between the HLS-EU-Q47 Healthcare scale and HLQ scale 1. Feeling understood and supported by healthcare providers. With the exception of HLQ scale 3. Actively managing my health, there were low to moderate correlations between the HLS-EU-Q47 Healthcare scale and all other HLQ scales (r = 0.32 to 0.57), indicating that these scales capture somewhat related constructs. The highest correlation (r = 0.89) was seen between two scales of the HLQ: 6. Ability to actively engage with healthcare providers and 7. Navigating the healthcare system.
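As an illustration of how a between-scale correlation of this kind can be computed, the following minimal sketch implements Spearman’s rank correlation as the Pearson correlation of average ranks, with tied scores sharing the mean of their positions. It assumes each respondent contributes a complete pair of scores; the function names and the example scores are hypothetical and are not study data.

```python
from statistics import fmean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = fmean(x), fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical paired scores for six respondents (illustration only).
performance_scores = [2, 5, 3, 6, 1, 4]          # e.g. a 0 to 6 performance test
self_report_scores = [3.0, 3.5, 3.5, 4.0, 2.5, 3.0]  # e.g. a 1 to 4 self-report scale
print(round(spearman(performance_scores, self_report_scores), 2))
```

Because the coefficient depends only on rank order, it suits scales with different ranges and skewed distributions, which is one reason a rank-based measure is a reasonable choice when comparing instruments scored on different metrics.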

Discussion

In this study we compared four commonly used health literacy instruments. Each of them aimed to measure functional health literacy in some way; however, there was only low to moderate correlation between instruments. In particular, the scales that aimed to measure functional health literacy (both of the TOFHLA scales, the NVS, the HLS-EU-Q47 Healthcare scale, and the HLQ scale 9. Understand health information well enough to know what to do) did not demonstrate any strong correlations. The TOFHLA was only moderately correlated with the NVS, while the HLS-EU-Q47 Healthcare scale demonstrated low to moderate correlations across all HLQ scales except scale 3.

There may be several methodological and conceptual explanations for the finding of little to no correlation between these scales. In terms of methodological explanations, these instruments were designed in different geographical contexts (the HLS-EU-Q47 was designed in Europe, the HLQ in Australia, and the NVS and TOFHLA in the US). Health literacy is influenced by cultural and contextual factors, and some instruments may be more culturally sensitive and relevant to certain populations, which may lead to better performance on those scales (Warnecke et al. 1997). In addition, the response format and scale design of each instrument were different: even the HLQ and HLS-EU-Q47, which both use Likert scales, had slightly different response options that may have yielded different results, even when questions appear similar.

In terms of conceptual explanations, a potential reason for the low correlation between the performance-based functional health literacy instruments (NVS and TOFHLA) and the scales that measure functional health literacy on the multidimensional self-report instruments (HLS-EU-Q47 Healthcare scale, and the HLQ scale 9. Understand health information well enough to know what to do) may relate to the different approaches used to assess functional health literacy. The TOFHLA and NVS employ direct testing methods, assessing participants’ actual ability to read and understand information. In contrast, the HLS-EU-Q47 and HLQ rely on self-report of individuals’ experiences and abilities, including their perceived difficulty in performing specific health-related tasks and understanding medical forms. The use of self-report tools for measuring functional health literacy has some potential limitations when compared with performance measures. Respondents may not be fully aware of their own skills and abilities, leading to inflated or deflated self-reported views of competency (Hawkins et al. 2017), a phenomenon that has previously been described (Mabe and West 1982). Despite these limitations, self-reported assessments offer important benefits. They allow respondents to consider other factors that might influence their health literacy, such as social support received from a spouse or caregiver. For example, the HLQ scales enable clinicians or researchers to understand the role of social support in helping individuals comprehend and utilise health information effectively. People with limited functional health literacy may rely on caregivers as surrogate readers, compensating for their own deficiencies (Baker et al. 2002). While performance-based instruments provide direct insights into an individual’s skills, self-report instruments offer a broader perspective, considering other factors that may mitigate the impact of limited functional health literacy.

Skewness and ceiling effects were common across the HLQ, HLS-EU-Q47, and TOFHLA. These instruments may not discriminate well between people with high levels of health literacy but may be more likely to detect small differences between people with low and very low levels of health literacy, which is important for understanding and responding equitably to people experiencing health literacy challenges (WHO report; Osborne et al. 2022b). While the NVS scores were more evenly distributed, floor effects were present, similar to findings in other studies (Salgado et al. 2013; Tseng et al. 2018). This suggests that the NVS may not be able to discriminate between people with low to very low levels of literacy. Moderate skew and ceiling effects have previously been reported for the HLQ (Beauchamp et al. 2015; Rademakers et al. 2020), as have skew and ceiling effects for the HLS-EU-Q47 (Duong et al. 2017; Pelikan et al. 2019). We could find no evidence of other studies reporting that the TOFHLA has high ceiling effects. It is possible that the study sample had higher-than-average health literacy, which could contribute to higher score ranges than might be seen in a general population sample.

The high CR for the HLS-EU-Q47 Health promotion scale suggests there may be some item redundancy, which is likely to have been addressed with the more recent short versions (16 items in total) of the instrument. The high correlation between HLQ scales 6. Active engagement with healthcare providers and 7. Navigating the healthcare system has previously been investigated, with findings concluding that at least two sources of reliable and substantive common factor variance may be represented: variance associated with the scale domains and variance associated with a general health literacy factor (Elsworth et al. 2022). Despite some evidence of correlation between some HLQ scales, the Elsworth study results support the current scoring of nine separate scales.

Implications for practice

A question arising from the results of this study is whether clinicians and researchers should rely on their patients/participants to self-report their own ability to find and use health information if their results are not likely to be correlated with performance measures that directly test reading comprehension ability. Clearly, these two types of measure capture different things. Ongoing application of self-report measures is important for several reasons. Self-reported measures capture a broader view of an individual’s health literacy, providing insights into their confidence, self-efficacy, and experience, all of which will impact an individual’s ability to understand health information beyond their performance on a reading skill assessment. These measures consider context-specific information such as social support, cultural factors, and individual experiences that performance-based measures do not capture. Encouraging individuals to self-report their abilities can empower them to reflect on their skills and identify areas for improvement, fostering patient engagement. Additionally, self-reported measures can help identify psychological and practical barriers to finding and using health information, guiding healthcare providers to tailor interventions and support services effectively. These data are valuable for research and policy development, informing public health interventions and strategies.

Strengths and limitations

This is the first study to directly compare first-generation performance measures of functional health literacy (TOFHLA and NVS) with the more recently developed self-report instruments (HLS-EU-Q47 and HLQ). Importantly, our sample included people who may experience health literacy challenges, recruited from a hospital population located in an area of socioeconomic disadvantage. The consecutive recruitment and face-to-face interviews sought to ensure the inclusion of people with healthcare challenges who are often excluded or under-represented in telephone or mail surveys. We sought to minimise potential respondent distress or burden and to avoid an important type of bias by randomising the order in which each instrument was administered. The sample size, while small, was appropriate to answer our research question, which was about the attributes of the instruments rather than about making generalisations about the population. In-depth interviews that explored people’s actual experiences in responding to the questions, and identified whether individual test scores accorded with their self-perceived individual abilities and engagement with the health system, would have strengthened the study.

Conclusion

Improving individual and population health literacy is now a national priority for many countries. This study found that the TOFHLA, NVS, HLS-EU, and HLQ are only moderately or weakly associated with each other, indicating that while they are all measures of health literacy, they measure different things. Therefore, when choosing an instrument, clinicians and researchers need to be cognisant of their intended measurement purpose (what sorts of decisions will be made based on score interpretations) and the types of health literacy constructs the different instruments measure.

Conflicts of interest

RHO and RB created the HLQ, one of the instruments tested in this paper. RHO and RB were not involved in the collection and preparation of data. The authors declare no other conflicts of interest.

Declaration of funding

The data used in this paper were collected as part of the PhD thesis of the first author, titled ‘Co-design of health literacy interventions to improve understanding, access and use of health services (2018)’. RLJ was funded by a National Health and Medical Research Council (NHMRC) PhD Scholarship #1075250. RHO was funded in part by an NHMRC Senior Research Fellowship #APP1059122. Alison Beauchamp was funded by an ARC Linkage Industry Fellowship. RB was funded by an NHMRC Senior Principal Research Fellowship #APP1082138. NHMRC and ARC were not involved in the research or preparation of this article.

Acknowledgements

The authors thank Mani Suleiman and Professor Gerald Elsworth who assisted with the psychometric and statistical analysis for this paper. In addition, we thank the nursing staff from Craigieburn Health Service who assisted in recruitment and the patients who gave up their time to participate.

References

Baker DW, Gazmararian JA, Williams MV, et al. (2002) Functional health literacy and the risk of hospital admission among Medicare managed care enrollees. American Journal of Public Health 92, 1278-1283.

Al Sayah F, Williams B, Johnson JA (2013) Measuring health literacy in individuals with diabetes: a systematic review and evaluation of available measures. Health Education & Behavior 40, 42-55.

Barber MN, Staples M, Osborne RH, et al. (2009) Up to a quarter of the Australian population may have suboptimal health literacy depending upon the measurement tool: results from a population-based survey. Health Promotion International 24(3), 252–261. 10.1093/heapro/dap022

Beauchamp A, Buchbinder R, Dodson S, et al. (2015) Distribution of health literacy strengths and weaknesses across socio-demographic groups: a cross-sectional survey using the Health Literacy Questionnaire (HLQ). BMC Public Health 15, 678.

Bonett DG, Wright TA (2000) Sample size requirements for estimating Pearson, Kendall and Spearman correlations. Psychometrika 65, 23-28.

Dias S, Gama A, Maia AC, et al. (2021) Migrant communities at the center in co-design of health literacy-based innovative solutions for non-communicable diseases prevention and risk reduction: application of the OPtimising HEalth LIteracy and Access (Ophelia) process. Frontiers in Public Health 9, 639405.

Driban JB, Morgan N, Price LL, et al. (2015) Patient-reported outcomes measurement information system (PROMIS) instruments among individuals with symptomatic knee osteoarthritis: a cross-sectional study of floor/ceiling effects and construct validity. BMC Musculoskeletal Disorders 16, 253.

Duong TV, Aringazina A, Baisunova G, et al. (2017) Measuring health literacy in Asia: validation of the HLS-EU-Q47 survey tool in six Asian countries. Journal of Epidemiology 27, 80-86.

Elsworth GR, Beauchamp A, Osborne RH (2016) Measuring health literacy in community agencies: a Bayesian study of the factor structure and measurement invariance of the health literacy questionnaire (HLQ). BMC Health Services Research 16, 508.

Elsworth GR, Nolte S, Cheng C, Hawkins M, Osborne RH (2022) Modelling variance in the multidimensional Health Literacy Questionnaire: does a general health literacy factor account for observed interscale correlations? SAGE Open Medicine 10, 20503121221124771.

Gulledge CM, Smith DG, Ziedas A, et al. (2019) Floor and ceiling effects, time to completion, and question burden of PROMIS CAT domains among shoulder and knee patients undergoing nonoperative and operative treatment. JBJS Open Access 4, e0015.

Haun J, Luther S, Dodd V, et al. (2012) Measurement variation across health literacy assessments: implications for assessment selection in research and practice. Journal of Health Communication 17, 141-159.

Hawkins M, Gill SD, Batterham R, et al. (2017) The Health Literacy Questionnaire (HLQ) at the patient-clinician interface: a qualitative study of what patients and clinicians mean by their HLQ scores. BMC Health Services Research 17, 309.

Hawkins M, Elsworth GR, Nolte S, et al. (2021) Validity arguments for patient-reported outcomes: justifying the intended interpretation and use of data. Journal of Patient-Reported Outcomes 5, 64.

Ho AD, Yu CC (2015) Descriptive statistics for modern test score distributions: skewness, kurtosis, discreteness, and ceiling effects. Educational and Psychological Measurement 75, 365-388.

Jessup RL, Awad N, Beauchamp A, Bramston C, Campbell D, Semciw A, Tully N, Fabri AM, Hayes J, Hull S, Clarke AC (2022) Staff and patient experience of the implementation and delivery of a virtual health care home monitoring service for COVID-19 in Melbourne, Australia. BMC Health Services Research 22(1), 911.

Jessup RL, Bramston C, Putrik P, et al. (2023) Frequent hospital presenters’ use of health information during COVID-19: results of a cross-sectional survey. BMC Health Services Research 23(1), 616.

Jordan JE, Osborne RH, Buchbinder R (2011) Critical appraisal of health literacy indices revealed variable underlying constructs, narrow content and psychometric weaknesses. Journal of Clinical Epidemiology 64, 366-379.

Mabe PA, West SG (1982) Validity of self-evaluation of ability: a review and meta-analysis. Journal of Applied Psychology 67, 280-296.

Matsuoka S, Kato N, Kayane T, et al. (2016) Development and validation of a heart failure–specific health literacy scale. Journal of Cardiovascular Nursing 31, 131-139.

Nutbeam D (2000) Health literacy as a public health goal: a challenge for contemporary health education and communication strategies into the 21st century. Health Promotion International 15, 259-267.

Nutbeam D (2009) Defining and measuring health literacy: what can we learn from literacy studies? International Journal of Public Health 54, 303-305.

Nutbeam D, Muscat DM (2020) Advancing health literacy interventions. In ‘Health literacy in clinical practice and public health 2020’. pp. 115-127. (IOS Press) 10.3233/SHTI200026

Osborne RH, Batterham RW, Elsworth GR, et al. (2013) The grounded psychometric development and initial validation of the Health Literacy Questionnaire (HLQ). BMC Public Health 13, 658.

Osborne RH, Cheng CC, Nolte S, et al. (2022a) Health literacy measurement: embracing diversity in a strengths-based approach to promote health and equity, and avoid epistemic injustice. BMJ Global Health 7, e009623.

Osborne RH, Elmer S, Hawkins M, et al. (2022b) Health literacy development is central to the prevention and control of non-communicable diseases. BMJ Global Health 7, e010362.

Parker RM, Baker DW, Williams MV, et al. (1995) The test of functional health literacy in adults: a new instrument for measuring patients’ literacy skills. Journal of General Internal Medicine 10, 537-541.

Pelikan JM, Ganahl K, Van Den Broucke S, et al. (2019) Measuring health literacy in Europe: Introducing the European Health Literacy Survey Questionnaire (HLS-EU-Q). In ‘International Handbook of Health Literacy’. pp. 115–138. 10.51952/9781447344520.ch008

Rademakers J, Waverijn G, Rijken M, et al. (2020) Towards a comprehensive, person-centred assessment of health literacy: translation, cultural adaptation and psychometric test of the Dutch Health Literacy Questionnaire. BMC Public Health 20, 1850.

Salgado TM, Ramos SB, Sobreira C, et al. (2013) Newest Vital Sign as a proxy for medication adherence in older adults. Journal of the American Pharmacists Association 53, 611-617.

Sørensen K, Van den Broucke S, Pelikan JM, et al. (2013) Measuring health literacy in populations: illuminating the design and development process of the European Health Literacy Survey Questionnaire (HLS-EU-Q). BMC Public Health 13, 948.

Tseng H-M, Liao S-F, Wen Y-P, et al. (2018) Adaptation and validation of a measure of health literacy in Taiwan: the newest vital sign. Biomedical Journal 41, 273-278.

Warnecke RB, Johnson TP, Chávez N, et al. (1997) Improving question wording in surveys of culturally diverse populations. Annals of Epidemiology 7, 334-342.

Weiss BD, Mays MZ, Martz W, et al. (2005) Quick assessment of literacy in primary care: the newest vital sign. Annals of Family Medicine 3, 514-522.
